The GPU Cloud for AI

On-demand & reserved NVIDIA cloud GPUs for AI training & inference


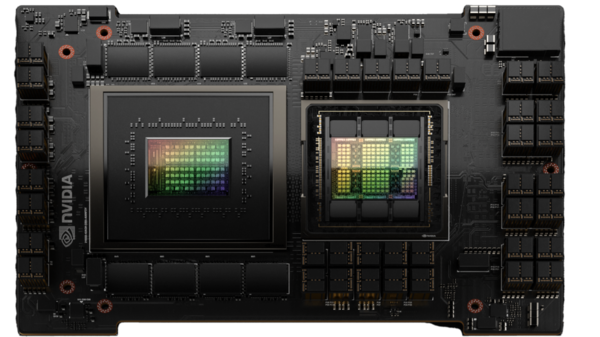

Lambda Reserved Cloud powered by NVIDIA H200

Lambda Reserved Cloud is now available with the NVIDIA H200 Tensor Core GPU. H200 is packed with 141GB of HBM3e running at 4.8TB/s: nearly double the GPU memory of H100, with 1.4x the memory bandwidth.
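The "nearly double" and "1.4x" figures can be checked with a quick back-of-envelope calculation. This sketch assumes the comparison baseline is an H100 SXM with 80 GB of HBM3 at 3.35 TB/s; those H100 numbers are an assumption and are not stated on this page.

```python
# Back-of-envelope check of the H200 vs. H100 comparison.
# Assumption: the baseline is an H100 SXM with 80 GB HBM3 at 3.35 TB/s.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8
H100_MEMORY_GB = 80          # assumed baseline
H100_BANDWIDTH_TBPS = 3.35   # assumed baseline


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


memory_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

print(f"Memory:    {memory_ratio:.2f}x")
print(f"Bandwidth: {bandwidth_ratio:.2f}x")
```

With that assumed baseline, memory comes out at about 1.76x ("nearly double") and bandwidth at about 1.43x.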

The only public cloud designed for training LLMs & Generative AI

On-Demand Cloud

Reserved Cloud

Starting at $2.49/GPU/Hour
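As a rough illustration of how per-GPU-hour pricing adds up, the sketch below multiplies the rate by GPU count and hours. It assumes the $2.49 starting rate applies to every GPU in the job and ignores storage, networking, and any discounts; `estimate_cost_cents` and the 8-GPU, 24-hour job are hypothetical. Working in integer cents avoids floating-point rounding.

```python
def estimate_cost_cents(rate_cents_per_gpu_hour: int, gpus: int, hours: int) -> int:
    """Estimate compute cost in cents for a job billed per GPU per hour.

    Hypothetical helper: ignores storage, egress, and discounts.
    """
    if gpus < 0 or hours < 0:
        raise ValueError("gpus and hours must be non-negative")
    return rate_cents_per_gpu_hour * gpus * hours


# Hypothetical job: one 8-GPU node for 24 hours at the $2.49 starting rate.
cost = estimate_cost_cents(rate_cents_per_gpu_hour=249, gpus=8, hours=24)
print(f"${cost // 100}.{cost % 100:02d}")  # prints "$478.08"
```

Actual rates vary by GPU type and plan, so treat the starting price as a lower bound.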

NVIDIA H100s are now available on-demand

Lambda is one of the first cloud providers to make NVIDIA H100 Tensor Core GPUs available on-demand in a public cloud.

See how Voltron Data leverages Lambda Reserved Cloud

Voltron Data completed an extensive cost-benefit evaluation of all major cloud providers and various on-prem solutions. In this compelling case study, they explain how Lambda's ability to deliver on availability and pricing drove their decision to partner with Lambda.

NVIDIA DGX™ SuperPOD Clusters deployed by Lambda

NVIDIA DGX™ SuperPOD

Turnkey, full-stack, industry-leading infrastructure solution for the fastest path to AI innovation at scale.

Lambda's datacenter

Lambda Stack is used by more than 50k ML teams

One-line installation and managed upgrade path for PyTorch®, TensorFlow, CUDA, cuDNN, and NVIDIA drivers. Learn more about Lambda Stack.
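After installing, one quick sanity check is to ask the framework which versions it sees. This is a generic sketch, not part of Lambda Stack itself: it assumes PyTorch is the framework of interest and uses only standard `torch` attributes (`torch.__version__`, `torch.cuda.is_available()`, `torch.version.cuda`, `torch.backends.cudnn.version()`). The helper name `stack_report` is hypothetical.

```python
import importlib
from typing import Any, Dict


def stack_report() -> Dict[str, Any]:
    """Collect PyTorch, CUDA, and cuDNN versions as seen from Python.

    Hypothetical helper: returns {"torch": None} if PyTorch is not installed.
    """
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return {"torch": None}
    return {
        "torch": torch.__version__,
        # True only if a usable GPU and driver are present
        "cuda_available": torch.cuda.is_available(),
        # CUDA version PyTorch was built with (None on CPU-only builds)
        "cuda_build": torch.version.cuda,
        # cuDNN version, or None if cuDNN is unavailable
        "cudnn": torch.backends.cudnn.version(),
    }


if __name__ == "__main__":
    for key, value in stack_report().items():
        print(f"{key}: {value}")
```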

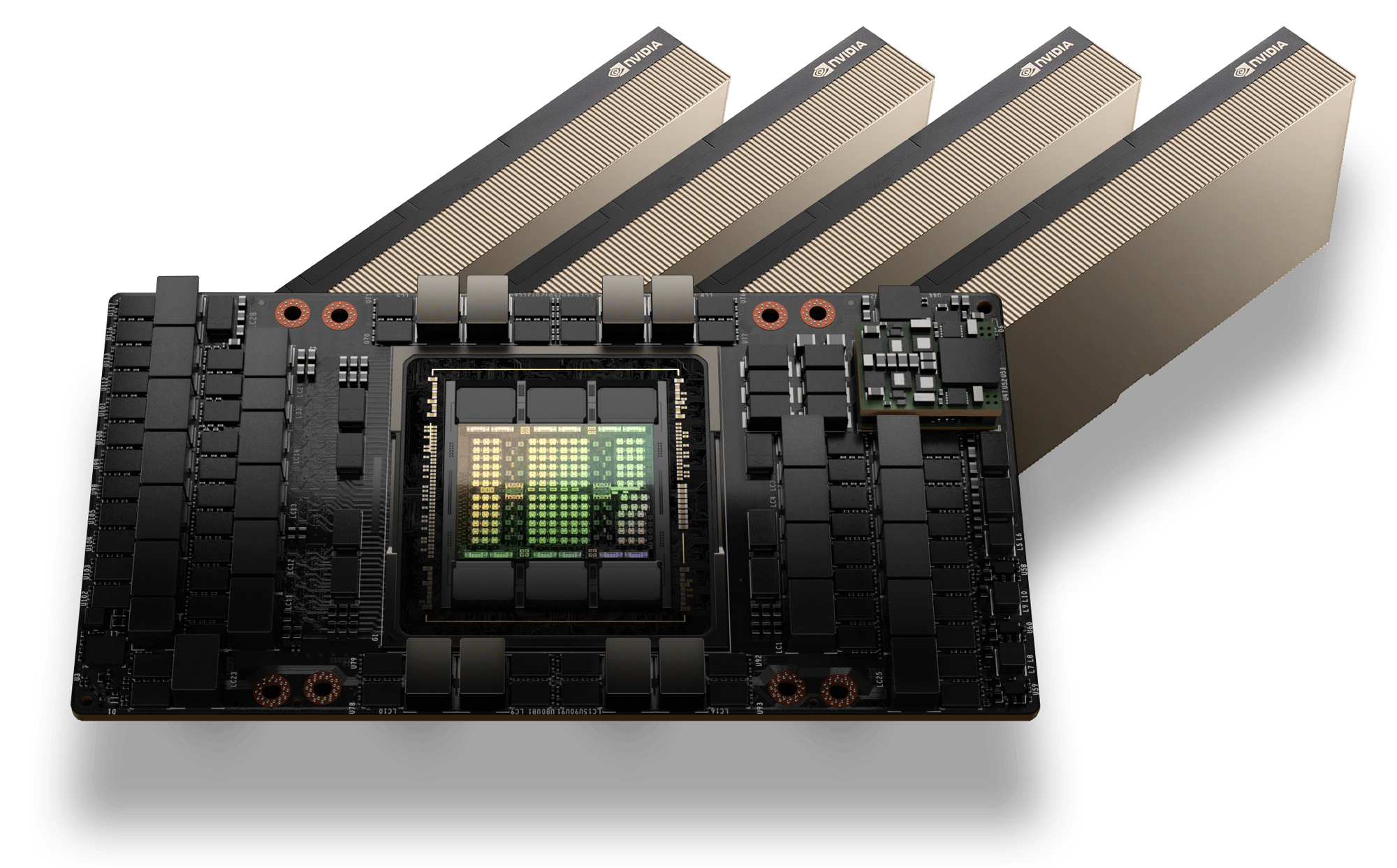

Lambda Reserved Cloud powered by NVIDIA GH200

Lambda Reserved Cloud is now available with the NVIDIA GH200 Grace Hopper™ Superchip. A single GH200 has 576 GB of coherent memory, offering unmatched efficiency and price for its memory footprint.
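To put 576 GB in perspective, the sketch below adds up the CPU and GPU memory pools and estimates how many 16-bit weights that capacity could hold. It assumes a 480 GB LPDDR5X (Grace CPU) + 96 GB HBM3 (Hopper GPU) split, which this page does not state, treats 1 GB as 10^9 bytes, and ignores activations, optimizer state, and framework overhead. The helper names are hypothetical.

```python
# Rough memory-footprint arithmetic for a single GH200.
# Assumption: 480 GB LPDDR5X (Grace CPU) + 96 GB HBM3 (Hopper GPU); 1 GB = 10**9 bytes.
LPDDR5X_GB = 480
HBM3_GB = 96
BYTES_PER_PARAM_FP16 = 2  # 16-bit weights (FP16/BF16)


def total_coherent_gb(cpu_gb: int, gpu_gb: int) -> int:
    """Total memory across the CPU and GPU pools."""
    return cpu_gb + gpu_gb


def max_params_billions(memory_gb: int, bytes_per_param: int) -> float:
    """Upper bound on parameters (in billions) whose weights alone fit in memory_gb.

    GB and "billions" are both 10**9, so the factors cancel.
    """
    return memory_gb / bytes_per_param


total = total_coherent_gb(LPDDR5X_GB, HBM3_GB)
print(f"Coherent memory: {total} GB")  # prints "Coherent memory: 576 GB"
print(f"FP16 weights that fit: ~{max_params_billions(total, BYTES_PER_PARAM_FP16):.0f}B params")
```

This is a capacity bound, not a performance estimate: data held in LPDDR5X is read at much lower bandwidth than data in HBM.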